
Quality/QA-tools/Testrunner-lite


Latest revision as of 07:56, 20 July 2012


Testrunner-lite

Testrunner-lite is a lightweight test execution tool. It reads XML test definition files as input and produces a test result file in a common format, which can be published to, for example, qa-reports. With testrunner-lite, you can

- Execute automatic, semi-automatic and manual test cases

- Execute tests locally or in host-based mode by yourself or as a part of test automation system

- Use options and filters to select the test cases to be executed

- Validate the used test plan file automatically

See our demo videos on YouTube:

- Demo of new libssh2 feature in version 1.5.0

- Proof of concept of parallel execution

- Demo of version 1.3.17

- Demo of version 1.3.11

- Demo of version 1.3.10

- MeeGo QA tools Youtube channel (not updated anymore)

A graphical user interface version of the tool, testrunner-ui, is also available.

Usage

The program is executed from the command line:

/usr/bin/testrunner-lite [options]

Options

-h, --help

Show this help message and exit.

-V, --version

Display version and exit.

-f FILE, --file=FILE

Input file with test definitions in XML (required).

-o FILE, --output=FILE

Output file for test results (required).

-r FORMAT, --format=FORMAT

Output file format. FORMAT can be xml or text. Default: xml

-e ENVIRONMENT, --environment=ENVIRONMENT

Target test environment. Default: hardware

-v, -vv, --verbose[={INFO|DEBUG}]

Enable verbosity mode; -v and --verbose=INFO are equivalent outputting INFO, ERROR and WARNING messages.

Similarly -vv and --verbose=DEBUG are equivalent, outputting also debug messages. Default behaviour is silent mode.

-L, --logger=URL

Remote HTTP logger for log messages. Log messages are sent to given URL in a HTTP POST message.

URL format is [http://]host[:port][/path/], where host may be a hostname or an IPv4 address.

-a, --automatic

Enable only automatic tests to be executed.

-m, --manual

Enable only manual tests to be executed.

-l FILTER, --filter=FILTER

Filtering option to select tests (not) to be executed. E.g. '-testcase=bad_test -type=unknown' first disables

test case named as bad_test. Next, all tests with type unknown are disabled. The remaining tests will be

executed. (Currently supported filter type are: testset,testcase,requirement,feature and type)

-c, --ci

Disable validation of test definition against schema.

-s, --semantic

Enable validation of test definition against stricter (semantics) schema.

-A, --validate-only

Do only input xml validation, do not execute tests.

-H, --no-hwinfo

Skip hwinfo obtaining.

-P, --print-step-output

Output standard streams from programs started in steps

-S, --syslog

Write log messages also to syslog.

-M, --disable-measurement-verdict

Do not fail cases based on measurement data

--measure-power

Perform current measurement with hat_ctrl tool during execution of test cases

-u URL, --vcs-url=URL

Causes testrunner-lite to write the given VCS URL to results.

-U URL, --package-url=URL

Causes testrunner-lite to write the given package URL to results.

--logid=ID

User defined identifier for HTTP log messages.

-d PATH, --rich-core-dumps=PATH

Save rich-core dumps. PATH is the location, where rich-core dumps are produced in the device. Creates UUID mappings between executed

test cases and generated rich-core dumps. This makes possible to link each rich-cores and test cases in test reporting

NOTE: This feature requires working sp-rich-core package to be installed in the Device Under Test.

Test commands are executed locally by default. Alternatively, one

of the following executors can be used:

Chroot Execution:

-C PATH, --chroot=PATH

Run tests inside a chroot environment. Note that this doesn't change the root of the testrunner itself,

only the tests will have the new root folder set.

Host-based SSH Execution:

-t [USER@]ADDRESS[:PORT], --target=[USER@]ADDRESS[:PORT]

Enable host-based testing. If given, commands are executed from test control PC (host) side. ADDRESS is the ipv4 address

of the system under test. Behind the scenes, host-based testing uses the external execution described below with SSH

and SCP.

-R[ACTION], --resume[=ACTION]

Resume testrun when ssh connection failure occurs.

The possible ACTIONs after resume are:

exit Exit after current test set

continue Continue normally to the next test set

The default action is 'exit'.

-i [USER@]ADDRESS[:PORT], --hwinfo-target=[USER@]ADDRESS[:PORT]

Obtain hwinfo remotely. Hwinfo is usually obtained locally or in case of host-based testing from target address. This option

overrides target address when hwinfo is obtained. Usage is similar to -t option.

-k KEY, --ssh-key=KEY

path to SSH private key file

Libssh2 Execution:

-n [USER@]ADDRESS, --libssh2=[USER@]ADDRESS

Run host based testing with native ssh (libssh2) EXPERIMENTAL

External Execution:

-E EXECUTOR, --executor=EXECUTOR

Use an external command to execute test commands on the system under test. The external command must accept a test

command as a single additional argument and exit with the status of the test command. For example, an external executor

that uses SSH to execute test commands could be "/usr/bin/ssh user@target".

-G GETTER, --getter=GETTER

Use an external command to get files from the system under test. The external getter should contain <FILE> and <DEST>

(with the brackets) where <FILE> will be replaced with the path to the file on the system under test and <DEST> will be

replaced with the destination directory on the host. If <FILE> and <DEST> are not specified, they will be appended

automatically. For example, an external getter that uses SCP to retrieve files could be "/usr/bin/scp target:'<FILE>' '<DEST>'".
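The placeholder handling described for the -G option can be illustrated with a small Python sketch. It is not the tool's own (C) code: the function name `expand_getter` is hypothetical, the sketch only covers the two cases the help text describes (both placeholders present, or both absent), and it performs no shell quoting.

```python
def expand_getter(getter, remote_file, dest_dir):
    """Fill in the <FILE> and <DEST> placeholders of an external getter command.

    Assumption for this sketch: the getter contains either both placeholders
    or neither of them.
    """
    if "<FILE>" not in getter and "<DEST>" not in getter:
        # Neither placeholder was given, so append both at the end.
        getter = getter + " <FILE> <DEST>"
    return getter.replace("<FILE>", remote_file).replace("<DEST>", dest_dir)


# Hypothetical file and destination, using the SCP getter from the help text.
command = expand_getter("/usr/bin/scp target:'<FILE>' '<DEST>'",
                        "/var/log/syslog", "/tmp/results")
print(command)
```

A getter given without placeholders, such as "/usr/bin/scp", would in this sketch expand to the command followed by the file path and the destination directory.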

Filtering options

Filtering options select which tests are executed. They are given as a single string, which may contain one or more filter entries. The format of each entry is 'filtername=values', where 'filtername' typically corresponds to an attribute in Test Definition XML and 'values' is a string that the filter should match. Multiple values can be specified if separated by commas (without extra spaces). A value containing a space must be enclosed in double quotes (""). The following characters are currently forbidden in values: single quote ('), double quote ("), equals sign (=), comma (,).

How filtering is carried out: Before the filter options are processed, all tests are active by default. Filters can only deactivate tests: if a filter value does not match the value of the corresponding attribute, the test is disabled. Each filter entry is applied to all the active tests in the test package, one after another, in the given order. Filtering is always carried out at the lowest level of the "suite-set-case-step" hierarchy where the corresponding attribute can be defined. Note that some attributes may inherit their value from the upper level. Please refer to Test Definition XML for details.

If 'filtername' is prefixed with a dash (-), the filter behaviour is reversed: tests for which the filter value does match are disabled.

'filtername' can be any of the following:

- feature

- requirement

- testset

- type

- testcase

The following example first disables all test cases except those with the attribute type='Integration'. Next, the test case named Bad Test is disabled. The tests left active are executed.

testrunner-lite -f tests.xml -o ~/results -l 'type=Integration -testcase="Bad Test"'

The following example executes test cases that specify the requirement attribute with a value containing at least one of the following strings: '50001', '50002', '50003'.

testrunner-lite -f tests.xml -o ~/results -l 'requirement=50001,50002,50003'

Note that each key=values entry is handled as a separate filter when checking whether a test case should be filtered out. The following example filters out all the test cases, since no test case can belong to both set1 and set2,

testrunner-lite -f tests.xml -o ~/results -l 'testset=set1 testset=set2'

whereas the following will accept tests from test sets "set1" and "set2".

testrunner-lite -f tests.xml -o ~/results -l 'testset=set1,set2'
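The filtering rules described in this section can be modelled with a short Python sketch. It is only an illustration and not the tool's actual implementation: the names `parse_filters` and `apply_filters`, the representation of a test as a dictionary of attribute values, and the use of exact string matching are all assumptions made for the example.

```python
import shlex


def parse_filters(filter_string):
    """Split a filter string into (name, values, negated) entries."""
    entries = []
    # shlex honours the double quotes around values that contain spaces.
    for token in shlex.split(filter_string):
        name, _, raw_values = token.partition("=")
        negated = name.startswith("-")
        if negated:
            name = name[1:]
        entries.append((name, raw_values.split(","), negated))
    return entries


def apply_filters(tests, filter_string):
    """Return the tests still active after applying every entry in order.

    Each test is a dict mapping a filter name to a list of attribute values
    (a hypothetical representation chosen for this sketch).
    """
    active = list(tests)  # all tests are active before filtering
    for name, values, negated in parse_filters(filter_string):
        kept = []
        for test in active:
            matches = any(value in test.get(name, []) for value in values)
            # A plain filter keeps matching tests; a dash-prefixed one drops them.
            if matches != negated:
                kept.append(test)
        active = kept
    return active


# Hypothetical test cases used only to exercise the sketch.
TESTS = [
    {"testcase": ["Good Test"], "type": ["Integration"], "testset": ["set1"]},
    {"testcase": ["Bad Test"], "type": ["Integration"], "testset": ["set1"]},
    {"testcase": ["Other Test"], "type": ["Functional"], "testset": ["set2"]},
]

remaining = apply_filters(TESTS, 'type=Integration -testcase="Bad Test"')
print([test["testcase"][0] for test in remaining])
```

Because entries are applied one after another to the tests that are still active, 'testset=set1 testset=set2' leaves nothing in this model, while 'testset=set1,set2' keeps tests from both sets.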

Manual Test Cases

It is also possible to execute manual test cases using testrunner-lite, as defined in Test Definition XML.

When a manual test case is encountered during execution, testrunner-lite goes through the defined test steps and asks the user whether each step passed or failed. The test case terminates at the first failure; otherwise every defined step is executed. After the test case is done, the user can enter additional comments.

Example output when running manual case:

[INFO] 15:15:53 Starting test case: ExampleTestCase
--- Execute test step ---
Description: Open calculator. Expected result: calculator opens up.
Please enter the result ([P/p]ass or [F/f]ail): P
--- Execute test step ---
Description: Stop calculator
Please enter the result ([P/p]ass or [F/f]ail): P
--- Test steps executed, case is PASSED ---
Please enter additional comments (ENTER to finish): Execution was slow.
[INFO] 15:16:41 Finished test case. Result: PASS

Testrunner-lite and test case verdicts

For manual cases the result is naturally set by the user. For automatic cases the result of a test case is set as follows:

- PASS

  - all test steps finished with expected results

- FAIL

  - test step has unexpected return value

  - test step timed out

  - pre-steps on the set the case belongs to have failed

- N/A

  - test case has state=Design in the tests.xml

  - test case has no steps

If a test case is filtered out, it does not get any result: it is not written to the results file at all.
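The verdict rules for automatic cases can be written down as a small Python sketch. The class and field names (`StepResult`, `CaseRun`, `set_pre_steps_failed`) are hypothetical, and the order in which the rules are checked (N/A first, then failed pre-steps, then the individual steps) is an assumption of the sketch rather than documented behaviour.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StepResult:
    expected: int            # expected return value of the step
    actual: int              # return value actually observed
    timed_out: bool = False  # True if the step hit its timeout


@dataclass
class CaseRun:
    state: str = ""                      # value of the case's state attribute
    steps: List[StepResult] = field(default_factory=list)
    set_pre_steps_failed: bool = False   # pre-steps of the enclosing set failed


def verdict(case):
    """Return 'PASS', 'FAIL' or 'N/A' for an automatic test case."""
    if case.state == "Design" or not case.steps:
        return "N/A"
    if case.set_pre_steps_failed:
        return "FAIL"
    for step in case.steps:
        if step.timed_out or step.actual != step.expected:
            return "FAIL"
    return "PASS"


print(verdict(CaseRun(steps=[StepResult(expected=0, actual=0)])))
```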

About process control

Each test step is executed in a separate shell. Testrunner-lite spawns a new process for the execution and waits for the step to finish (or time out). If a test step contains a command that is started in the background, the step returns immediately. After a test case has finished, a cleanup routine is executed in which testrunner-lite tries to kill all processes that may have been left running by the test steps. Cleanup for pre-steps and post-steps is done after the post-steps are executed (i.e. when the test set has been executed).

About host based execution

Testrunner-lite supports host-based execution, where testrunner-lite runs on a PC and the test steps are executed over SSH on the hardware. This requires that key-based SSH authentication is enabled between the device and the host.

Remember that each step in pre_steps, post_steps or inside test cases is executed in a separate SSH session. If a step leaves a process running in the background that inherits its pipes straight from the parent shell, the SSH connection hangs until that process terminates (or closes its pipes). Therefore, always redirect the stdout, stderr and stdin streams of your background processes if you don't want your test steps or pre/post steps to time out in host-based execution.

See http://www.snailbook.com/faq/background-jobs.auto.html for more information.
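The effect of inherited pipes can be reproduced locally without SSH. The following Python sketch is only an analogue of the situation described above: it assumes a POSIX system with `sh` and `sleep` available, and the helper name `run_step` is hypothetical. It captures the output of a shell command through pipes, much as an SSH session would, and starts a background process whose three standard streams are redirected.

```python
import subprocess
import time


def run_step(command):
    """Run one shell command, wait for its output pipes to close, and time it."""
    start = time.monotonic()
    result = subprocess.run(["sh", "-c", command], capture_output=True, text=True)
    return time.monotonic() - start, result.stdout


# The background sleep has stdin, stdout and stderr redirected, so it does not
# hold the captured pipes open and the step returns without waiting for it.
elapsed, output = run_step("sleep 3 </dev/null >/dev/null 2>&1 & echo started")
print(output.strip(), "- returned before sleep finished:", elapsed < 3)
```

Without the redirections (for example "sleep 3 & echo started"), the background process would keep the captured stdout pipe open, and the call would be expected to return only once the sleep has exited.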

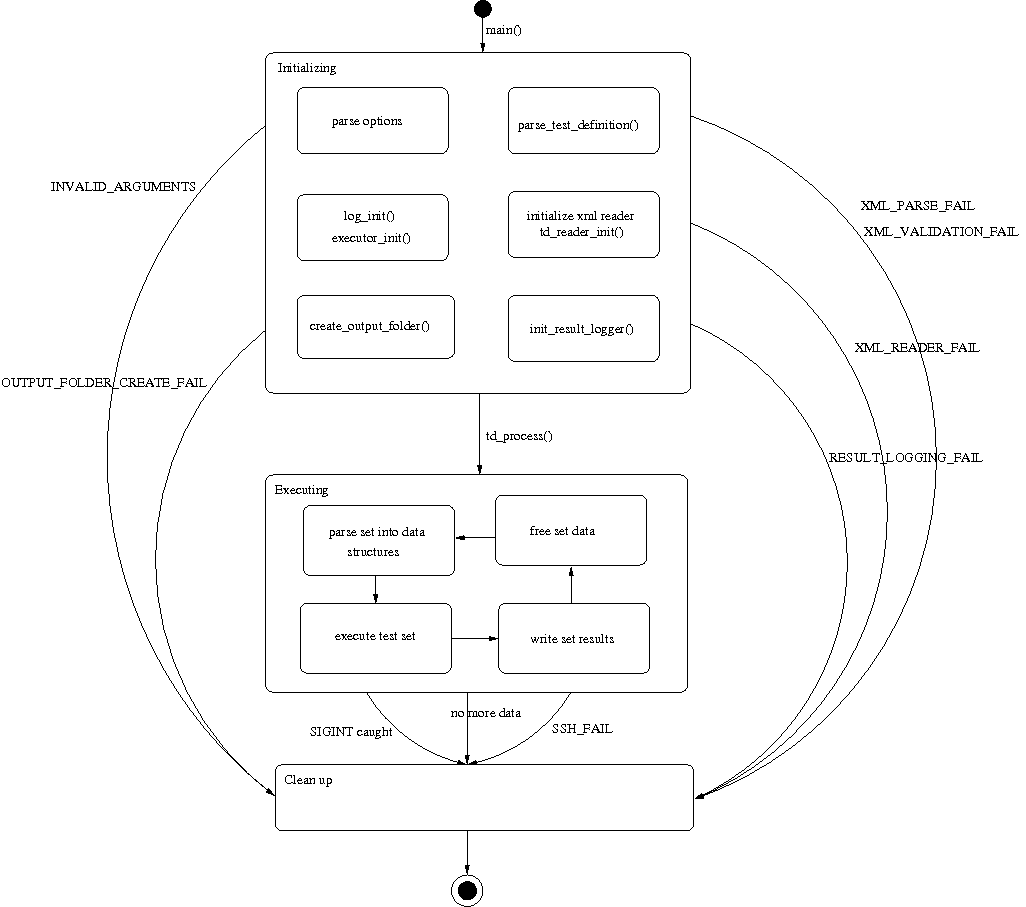

Testrunner-lite state machine

Below is a simplified picture describing the operation of testrunner-lite. The program flow can be considered to consist of three phases: initializing, executing and clean up. The execution is done set by set, so that the tool needs to maintain only one set at a time in its memory.

xfig file: File:Testrunnerlitesm.fig

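Development

- Source codes in Gitorious: http://meego.gitorious.org/test-execution-tools/testrunner-lite

- Bugs are tracked in Mer bugzilla: https://bugs.merproject.org/buglist.cgi?product=Mer%20QA&component=testrunner-lite&resolution=---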

Reimplementation in Python discussion

There has been discussion about whether testrunner-lite should be rewritten in Python. The main motivation is to allow better integration with test automation (ots). Pros and cons of doing this are listed below (feel free to add your views).

Pros

- Integration with the test automation machinery (which is mainly written in Python)

- Easier to maintain and faster to make changes (ymmv)

Cons

- current implementation is thoroughly tested in continuous production use

- C implementation has fewer dependencies

- C implementation has better performance (it's called 'lite' for a reason)

- C implementation is better suited for running the tool on device

Alternative solutions

- turning testrunner-lite into a library which allows making python bindings and compiling the functionality into e.g. testrunner-ui